In the wake of an increase in cyberattacks against machine learning (ML) systems, Microsoft, along with MITRE and 11 other contributing organizations, has released the Adversarial ML Threat Matrix.

The Adversarial ML Threat Matrix is an open ATT&CK-style framework to help security analysts detect, respond to, and remediate threats against ML systems.

Machine learning (ML) is often seen as a subset of artificial intelligence (AI) and is based on the ability of systems to automatically learn and improve from experience. Many industries, such as finance, healthcare, and defense, have used ML to transform their businesses and positively impact people worldwide.

Despite these advancements in ML and AI, however, Microsoft warned that many organizations have not kept pace in securing their ML systems.

Over the last several years, for instance, tech giants such as Google, Amazon, Microsoft and Tesla have all been victims of attacks on their ML systems.

Furthermore, a Gartner report projected that 30% of AI cyberattacks through 2022 will involve data poisoning, model theft or adversarial examples.

To make matters worse, a survey of 28 organizations found that 25 of them did not know how to secure their ML systems.

For these reasons, Microsoft and MITRE have published the new Adversarial ML Threat Matrix, a framework that “systematically organizes the techniques employed by malicious adversaries in subverting ML systems.”

The joint effort was developed by Microsoft and MITRE, along with IBM, NVIDIA, Bosch and several other organizations.

Published on GitHub, the material consists primarily of:

- Adversarial ML 101

- Adversarial ML Threat Matrix

- Case Studies.

Adversarial ML 101

Keith Manville of MITRE describes Adversarial ML 101 as “subverting machine learning systems for fun and profit.”

“The methods underpinning the production machine learning systems are systematically vulnerable to a new class of vulnerabilities across the machine learning supply chain collectively known as Adversarial Machine Learning. Adversaries can exploit these vulnerabilities to manipulate AI systems in order to alter their behavior to serve a malicious end goal,” Manville added.

Manville also provided several examples of machine learning attacks, including Model Evasion, Functional Extraction and Model Poisoning.
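To make one of these attack types concrete, the following is a minimal sketch of model evasion against a toy linear classifier. It is not taken from the Adversarial ML 101 material: the weights, bias, sample values and the helper names `score`, `predict` and `evade` are all assumptions made up for illustration. The idea shown is that a small, bounded change to each input feature can flip the model's decision.

```python
# Toy linear classifier: score = w . x + b; label 1 ("malicious") if score > 0.
# WEIGHTS, BIAS and the sample below are made-up values for illustration only.
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = -0.5


def score(x):
    """Return the raw decision score for feature vector x."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def predict(x):
    """Return 1 if the classifier flags x, otherwise 0."""
    return 1 if score(x) > 0 else 0


def evade(x, epsilon):
    """Shift every feature by at most epsilon, against the sign of its weight.

    For a linear model this is the perturbation that lowers the score the
    most while changing no single feature by more than epsilon.
    """
    def sign(v):
        return (v > 0) - (v < 0)

    return [xi - epsilon * sign(w) for w, xi in zip(WEIGHTS, x)]


sample = [1.0, 1.0, 1.0]
adversarial = evade(sample, epsilon=0.4)

print("original prediction:   ", predict(sample))
print("adversarial prediction:", predict(adversarial))
```

Real evasion attacks target far more complex models and usually have to estimate this direction from gradients or repeated queries, but the principle of a small input change producing a different output is the same.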

Three attack scenarios, each with a diagram, were also provided: Inference Attack, Training Time Attack and Attack on Edge/Client.

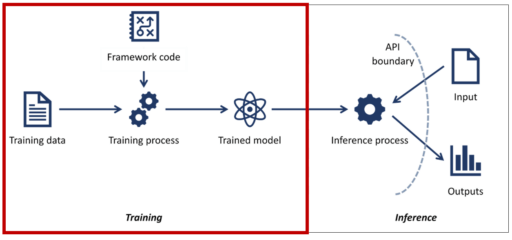

For example, the Training Time Attack is described in Figure 1:

Adversarial ML Threat Matrix

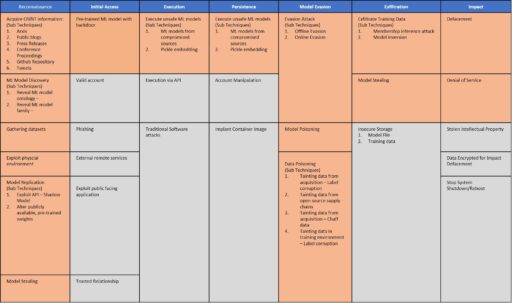

The structure of the Adversarial ML Threat Matrix is modeled after the ATT&CK Enterprise framework. For example, the matrix leverages many of the same column headers for “Tactics” (i.e., Reconnaissance, Initial Access, Execution, Persistence, Model Evasion, Exfiltration and Impact).

However, the matrix shown in Figure 2 adds “Techniques” (in orange) that are specific to ML systems, whereas the remaining techniques (in grey) apply to both ML and non-ML systems and come directly from ATT&CK Enterprise.
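One way to picture this layout is as a mapping from each tactic (a column) to a list of techniques, each tagged as ML-specific or inherited from ATT&CK Enterprise. The sketch below is only an illustration of that structure, not the project's actual data format. The tactic names are the column headers listed above; the technique entries, the `Technique` class and the `ml_specific_techniques` helper are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Technique:
    name: str
    ml_specific: bool  # True: orange in Figure 2; False: taken from ATT&CK Enterprise


# Column headers ("Tactics") as listed in the article.
TACTICS = [
    "Reconnaissance", "Initial Access", "Execution", "Persistence",
    "Model Evasion", "Exfiltration", "Impact",
]

# Placeholder entries only; these are NOT the techniques in the real matrix.
MATRIX = {tactic: [] for tactic in TACTICS}
MATRIX["Initial Access"].append(Technique("example-enterprise-technique", False))
MATRIX["Persistence"].append(Technique("example-ml-technique", True))


def ml_specific_techniques(matrix):
    """Return, per tactic, the names of techniques flagged as ML-specific."""
    result = {}
    for tactic, techniques in matrix.items():
        names = [t.name for t in techniques if t.ml_specific]
        if names:
            result[tactic] = names
    return result


print(ml_specific_techniques(MATRIX))
```

Keeping the ML-specific flag on each technique, rather than maintaining two separate matrices, mirrors how the figure overlays both kinds of technique on a single set of tactic columns.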

MITRE also clarified that the Adversarial ML Threat Matrix is not yet part of the ATT&CK matrix.

Case studies

Finally, multiple case studies were published, including two involving evasion attacks against Microsoft (Azure Service and Microsoft Edge AI).

Additional case studies were documented, such as:

- Clearview AI (Misconfiguration)

- GPT-2 (Model Replication)

- ProofPoint (Evasion)

- Tay (Poisoning).

“Attacks on machine learning (ML) systems are being developed and released with increased regularity. Historically, attacks against ML systems have been performed in a controlled academic settings, but as these case-studies demonstrate, attacks are being seen in-the-wild,” Manville said.